17× Greener: How One AI Model Could've Saved 15,225 kg CO₂e

Training AI models in cleaner regions can cut emissions by over 90%. With smarter compute choices, Stable Diffusion's training could have avoided 15,225 kg CO₂e and saved around $150k.

The Problem – Dirty AI

Most AI training runs on dirty, fossil-fueled energy, and it's expensive.

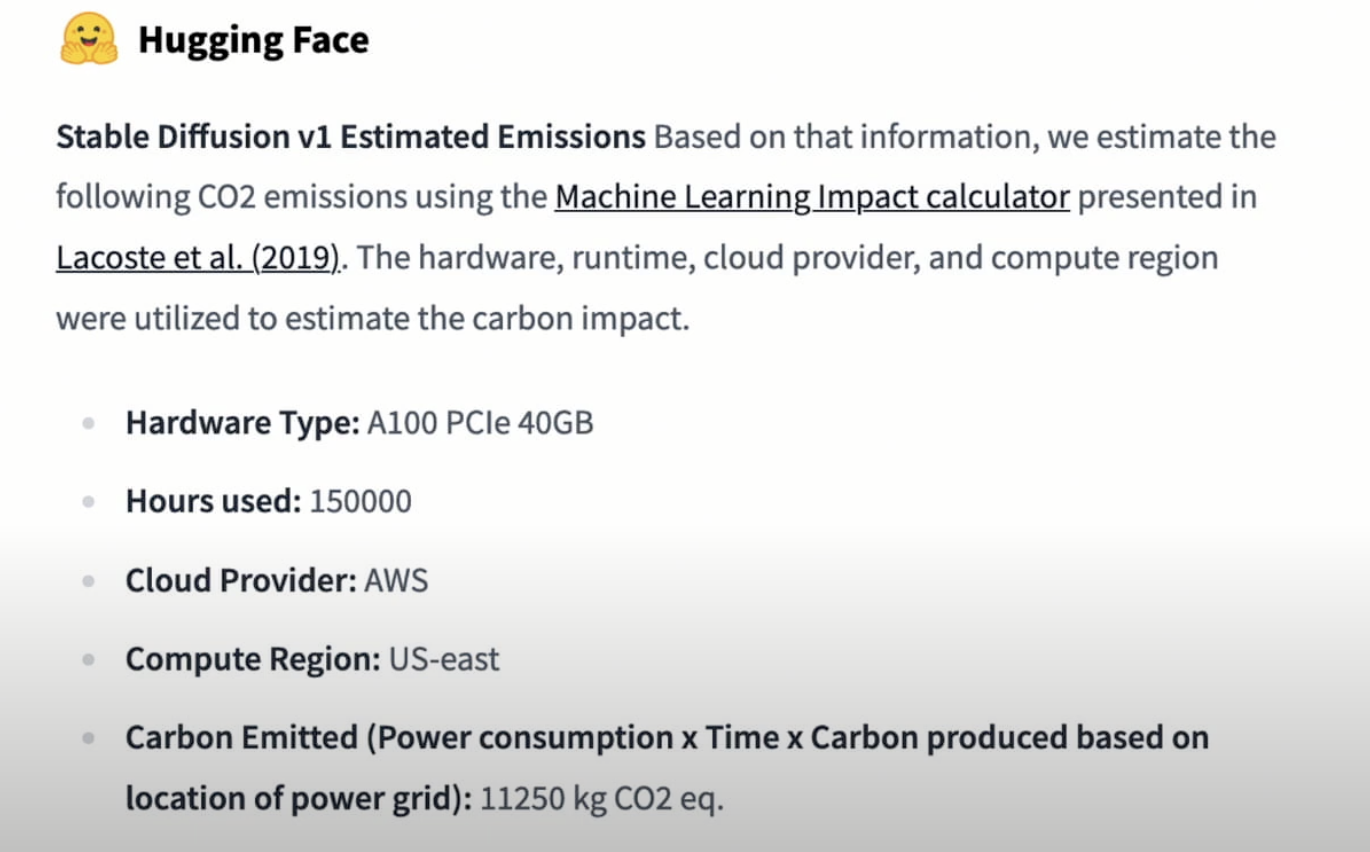

The team behind Stable Diffusion, a popular text-to-image model, recently trained v1.4 on:

- Hardware: A100 PCIe 40GB

- Usage: 150,000 hours

- Cloud Provider: AWS

- Region: US-East (N. Virginia)

- Grid Intensity: 430 gCO₂e/kWh

- Total Emissions: ~16,125 kg CO₂e

- Total Cost: $600,000 (Yikes!)
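As a rough check, the listed totals can be reproduced from the specs. This sketch assumes each A100 PCIe 40GB draws its 250 W rated TDP for every GPU-hour and ignores CPU, networking and data-centre overhead (PUE), so it is an estimate rather than a measurement. Under that assumption it recovers the ~16,125 kg CO₂e figure and a rate of about $4 per GPU-hour.

```python
# Rough reconstruction of the listed Stable Diffusion v1.4 totals.
# Assumption: every GPU-hour draws the A100 PCIe 40GB's 250 W rated TDP;
# CPU, networking and data-centre overhead (PUE) are ignored.
GPU_HOURS = 150_000          # "Usage" from the spec list
GPU_POWER_KW = 0.250         # assumed constant draw per GPU, in kW
GRID_G_PER_KWH = 430         # US-East (N. Virginia) grid intensity
TOTAL_COST_USD = 600_000     # reported total training cost

energy_kwh = GPU_HOURS * GPU_POWER_KW                 # 150,000 h x 0.25 kW
emissions_kg = energy_kwh * GRID_G_PER_KWH / 1000     # grams -> kilograms
cost_per_gpu_hour = TOTAL_COST_USD / GPU_HOURS        # flat hourly rate

print(f"Energy:    {energy_kwh:,.0f} kWh")
print(f"Emissions: {emissions_kg:,.0f} kg CO2e")
print(f"Rate:      ${cost_per_gpu_hour:.2f} per GPU-hour")
```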

According to Emad Mostaque, former CEO of Stability AI, the next version (v1.5) of Stable Diffusion cost an eye-watering $600,000 to train. We can reasonably estimate that v1.4 had a similar cost profile, running at around $4 per GPU-hour ($600,000 ÷ 150,000 hours), which highlights just how expensive training large AI models can be.

The Solution – Cleaner Compute

Imagine running the same job in a region where the grid is roughly 17× cleaner.

Had we run this job in Paris (24 gCO₂e/kWh), it would have saved 15,225 kg CO₂e.
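The Paris comparison uses the same arithmetic with only the grid intensity changed. This is a minimal sketch, assuming each GPU draws a constant 250 W (the A100 PCIe 40GB's rated TDP) and ignoring facility overhead; the function name `emissions_kg` is illustrative.

```python
# Compare the same training job on two grids.
# Assumption: constant 250 W per GPU, no data-centre overhead (PUE).
GPU_HOURS = 150_000
POWER_KW = 0.25

def emissions_kg(intensity_g_per_kwh: float) -> float:
    """Emissions in kg CO2e for the whole job at a grid intensity in gCO2e/kWh."""
    energy_kwh = GPU_HOURS * POWER_KW
    return energy_kwh * intensity_g_per_kwh / 1000

us_east = emissions_kg(430)   # US-East (N. Virginia)
paris = emissions_kg(24)      # Paris
saved = us_east - paris
reduction_pct = 100 * saved / us_east
cleaner_factor = 430 / 24     # how many times cleaner the Paris grid is

print(f"US-East: {us_east:,.0f} kg, Paris: {paris:,.0f} kg")
print(f"Saved: {saved:,.0f} kg ({reduction_pct:.1f}% less, {cleaner_factor:.1f}x cleaner grid)")
```

Because energy use is identical in both regions, the reduction depends only on the ratio of grid intensities: 1 − 24/430 ≈ 94%.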

Same job. Same cloud. Smarter infrastructure.

This is the power of carbon-aware compute.

Conclusion

It’s hard to find GPUs in clean, low-carbon regions, but it’s possible, and the benefits aren’t just environmental.

Running this model in a region like Paris would have produced just 900 kg of CO₂e, compared to 16,125 kg in us-east-1. That's a reduction of over 90% (about 94%), equivalent to 15 flights from New York to London.

Shopping around among providers offering similar boxes is well worth it too, with potential cost savings of over $150,000, which works out to roughly $1 less per GPU-hour across 150,000 hours.
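The $150,000 figure can be turned into a per-hour target. A minimal sketch, assuming the ~$4 per GPU-hour baseline implied by the $600,000 total and that the job still needs 150,000 GPU-hours on the alternative hardware; it does not quote any real provider's price.

```python
# How much cheaper per GPU-hour must an alternative be to save $150,000?
# Assumptions: the job still needs 150,000 GPU-hours elsewhere, and the
# baseline rate is the ~$4/GPU-hour implied by the $600,000 total.
GPU_HOURS = 150_000
BASELINE_RATE = 600_000 / GPU_HOURS     # $ per GPU-hour on US-East
TARGET_SAVING = 150_000                 # $ saving quoted in this post

def run_cost(rate_per_gpu_hour: float) -> float:
    """Total cost in $ for the whole job at a flat hourly rate."""
    return GPU_HOURS * rate_per_gpu_hour

required_discount = TARGET_SAVING / GPU_HOURS      # $ less per GPU-hour
max_alt_rate = BASELINE_RATE - required_discount   # highest rate that still saves $150k

print(f"Baseline: ${BASELINE_RATE:.2f}/GPU-hour, ${run_cost(BASELINE_RATE):,.0f} total")
print(f"Needed:   ${max_alt_rate:.2f}/GPU-hour or less "
      f"({required_discount / BASELINE_RATE:.0%} cheaper)")
```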

CarbonRunner doesn't just bring carbon-aware infrastructure to CI/CD workflows on GitHub Actions. We also offer a powerful API that helps you train AI/ML models in the lowest-carbon regions across multiple clouds.

We arbitrage across multiple providers in real time to surface the best-value, lowest-emissions boxes, so you save money as well as carbon.
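CarbonRunner's actual API and ranking logic aren't described in this post, so the following is only a hypothetical sketch of one simple selection rule: among offers under a price cap, pick the lowest-carbon grid and break ties on price. The `Offer` type, the `pick_offer` function and every offer in the list are made up for illustration, not real quotes.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Offer:
    provider: str
    region: str
    usd_per_gpu_hour: float
    grid_g_per_kwh: float

def pick_offer(offers: list[Offer], max_usd_per_gpu_hour: float) -> Optional[Offer]:
    """Return the lowest-carbon offer within budget; ties go to the cheaper one."""
    affordable = [o for o in offers if o.usd_per_gpu_hour <= max_usd_per_gpu_hour]
    if not affordable:
        return None
    # Sort key: grid intensity first, then price as a tie-breaker.
    return min(affordable, key=lambda o: (o.grid_g_per_kwh, o.usd_per_gpu_hour))

# Hypothetical offers: names, prices and intensities are illustrative only.
offers = [
    Offer("provider-a", "region-1", 4.00, 430),
    Offer("provider-b", "region-2", 3.50, 24),
    Offer("provider-c", "region-3", 2.90, 24),
    Offer("provider-d", "region-4", 5.20, 10),
]

best = pick_offer(offers, max_usd_per_gpu_hour=4.00)
print(best)
```

A hard budget keeps the rule easy to reason about; a real system would also need live price, grid-intensity and GPU-availability data, and might trade carbon against cost rather than filter on price.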

Want to Shift AI Workloads to Cleaner Grids?

Stable Diffusion v1.4 emitted roughly 16,125 kg CO₂e on US-East. The same training on a low-carbon grid would have cut emissions by 94%, saving 15,225 kg CO₂e. Clean compute isn't slower; it's just smarter and cheaper!